
research notes

How GPT-2 and GPT-3 Work

*** Excerpts of the relevant parts of Jay Alammar's blog ***

https://jalammar.github.io/illustrated-gpt2/

https://jalammar.github.io/how-gpt3-works-visualizations-animations/

The Illustrated GPT-2

□ Looking Inside GPT-2

The simplest way to run a trained GPT-2 is to let it ramble on its own, which is technically called generating unconditional samples. Alternatively, we can give it a prompt so that it speaks about a certain topic (a.k.a. generating interactive conditional samples). In the rambling case, we can simply hand it the start token and have it start generating words (the trained model uses <|endoftext|> as its start token; let's call it <s> instead).

The model has only one input token, so that path is the only active one. The token is processed successively through all the layers, and a vector is produced along that path. That vector can be scored against the model's vocabulary (all the tokens the model knows: 50,257 in the case of GPT-2). In this case we selected the token with the highest probability, ‘the’.
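A minimal sketch of that scoring step, assuming a hypothetical five-word vocabulary and made-up scores (a real GPT-2 scores every token in its vocabulary): a softmax turns the raw scores into probabilities, and picking the largest one is greedy selection.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy vocabulary and scores, for illustration only.
vocab = ["the", "a", "robot", "must", "obey"]
scores = [4.1, 2.3, 0.5, -1.0, -0.7]

probs = softmax(scores)
best = max(range(len(vocab)), key=lambda i: probs[i])
print(vocab[best])  # the
```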

In the next step, we add the output from the first step to our input sequence and have the model make its next prediction.

Notice that the second path is the only one active in this calculation. Each layer of GPT-2 has retained its own interpretation of the first token and uses it when processing the second token. GPT-2 does not re-interpret the first token in light of the second token, because its masked self-attention never lets an earlier position look at a later one.
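A toy sketch of why the first token never needs to be re-read: many GPT-2 implementations keep, per layer, a cache of each earlier token's key and value vectors (commonly called KV caching; the excerpt above does not name it). Here a single attention head with hand-picked 2-dimensional vectors stands in for one layer. In a real model the query, key, and value come from learned projections, and `KVCache` is a hypothetical name.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def attend(query, keys, values):
    """Scaled dot-product attention of one query over the cached keys/values."""
    d = len(query)
    scores = [dot(query, k) / math.sqrt(d) for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    # Weighted sum of the value vectors, one output component at a time.
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]

class KVCache:
    """Hypothetical per-layer store of earlier tokens' keys and values."""

    def __init__(self):
        self.keys, self.values = [], []

    def step(self, q, k, v):
        # Only the new token's key/value are appended; earlier entries are reused as-is.
        self.keys.append(k)
        self.values.append(v)
        return attend(q, self.keys, self.values)

cache = KVCache()
out1 = cache.step(q=[1.0, 0.0], k=[1.0, 0.0], v=[1.0, 2.0])  # first token sees only itself
first_key = list(cache.keys[0])
out2 = cache.step(q=[0.0, 1.0], k=[0.0, 1.0], v=[3.0, 4.0])  # second token attends to both
print(out1)  # [1.0, 2.0]
```

The first token's cached entry is identical before and after the second step, which mirrors the point above: its interpretation is kept and reused, not recomputed.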

□ Model Output

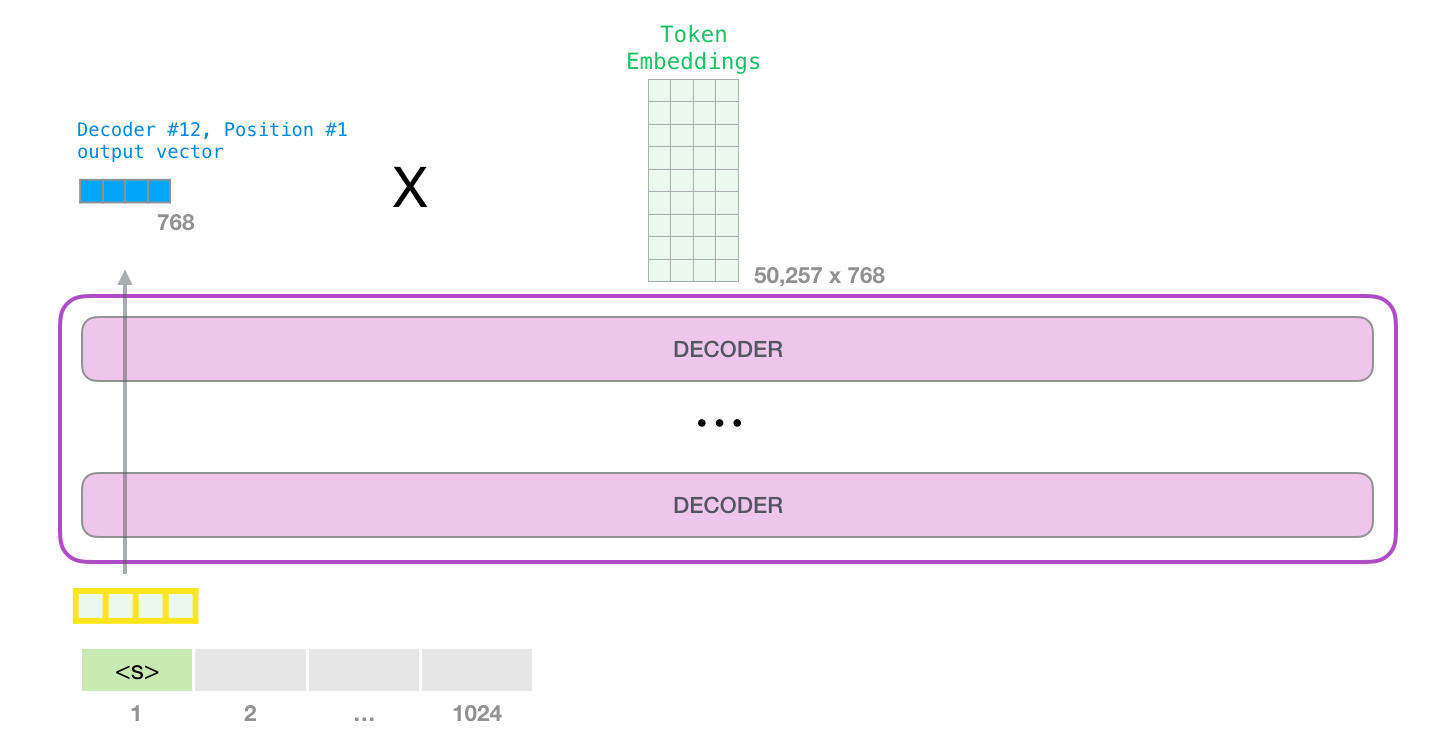

When the top block in the model produces its output vector (the result of its own self-attention followed by its own neural network), the model multiplies that vector by the embedding matrix.

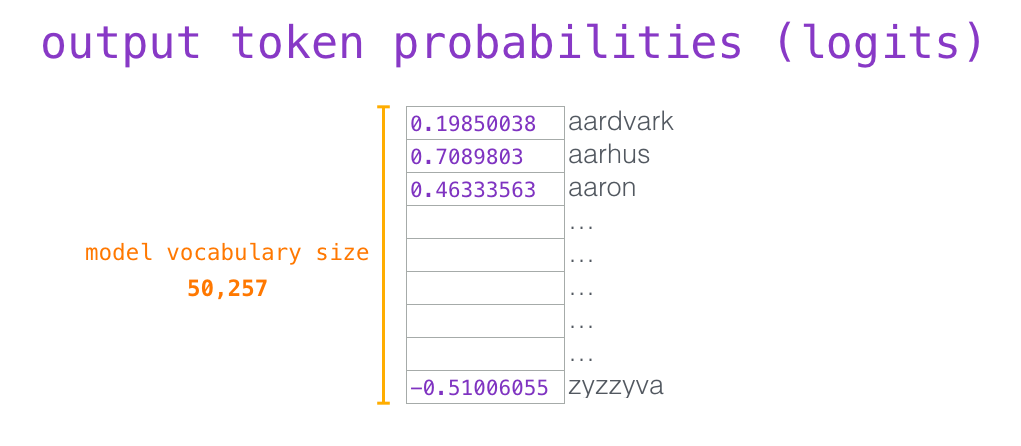

Recall that each row in the embedding matrix corresponds to the embedding of a word in the model’s vocabulary. The result of this multiplication is interpreted as a score for each word in the model’s vocabulary.
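The multiplication can be sketched as one dot product per row of the embedding matrix. The vocabulary, embeddings, and output vector below are made up, and the sketch skips the final layer normalization GPT-2 applies before this step. GPT-2 reuses its input token-embedding matrix here (weight tying), so the same matrix is used to read tokens in and to score them on the way out.

```python
def output_scores(hidden, embedding_matrix):
    """Score every vocabulary entry: dot product of the top block's vector with each row."""
    return [sum(h * e for h, e in zip(hidden, row)) for row in embedding_matrix]

# Hypothetical 3-word vocabulary with 4-dimensional embeddings (one row per word).
vocab = ["the", "robot", "obey"]
embedding_matrix = [
    [0.5, 0.1, 0.0, 0.2],
    [0.0, 0.9, 0.3, 0.1],
    [0.2, 0.0, 0.8, 0.0],
]
hidden = [1.0, 0.2, 0.1, 0.0]  # made-up output vector of the top block

scores = output_scores(hidden, embedding_matrix)  # one score per vocabulary word
print(vocab[max(range(len(scores)), key=scores.__getitem__)])  # the
```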

We can simply select the token with the highest score (top_k = 1), but better results are achieved if the model considers other words as well. A better strategy is to turn the scores into probabilities and sample a word from the entire list, so words with a higher score have a higher chance of being selected. A middle ground is setting top_k to 40, so the model samples only among the 40 words with the highest scores.
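A sketch of top-k sampling over made-up scores using only the standard library; `top_k_sample` is a hypothetical helper, not a GPT-2 API. With `k=1` it reduces to greedy selection, with `k` at least the vocabulary size it samples from the whole list, and `k=40` on a real vocabulary would be the middle ground described above.

```python
import math
import random

def top_k_sample(scores, k, rng=random):
    """Keep the k highest-scoring token indices and sample one, weighted by exp(score)."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    m = max(scores[i] for i in top)
    weights = [math.exp(scores[i] - m) for i in top]  # unnormalized softmax over the top k
    return rng.choices(top, weights=weights, k=1)[0]

scores = [4.1, 2.3, 0.5, -1.0, -0.7]  # hypothetical scores for a 5-token vocabulary
rng = random.Random(0)                # seeded so runs are reproducible

greedy = top_k_sample(scores, k=1, rng=rng)   # always index 0
sampled = top_k_sample(scores, k=3, rng=rng)  # one of indices 0, 1, 2
```

`random.choices` normalizes the weights itself, so passing the exponentiated scores is equivalent to sampling from a softmax restricted to the top k tokens.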

With that, the model has completed one iteration and output a single word. The model keeps iterating until the entire context (1,024 tokens) is filled or until an end-of-sequence token is produced.
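The outer loop and its two stopping conditions can be sketched as follows. `next_token` is a hypothetical stand-in for one full forward pass plus token selection; 1,024 is GPT-2's context size and 50256 is the id of `<|endoftext|>` in GPT-2's vocabulary.

```python
from typing import Callable, List

CONTEXT_SIZE = 1024  # GPT-2's context length in tokens
END_OF_TEXT = 50256  # id of <|endoftext|> in GPT-2's vocabulary

def generate(next_token: Callable[[List[int]], int], prompt: List[int]) -> List[int]:
    """Append one predicted token per iteration until <|endoftext|> or the context is full."""
    tokens = list(prompt)
    while len(tokens) < CONTEXT_SIZE:
        token = next_token(tokens)  # one forward pass + token selection
        tokens.append(token)        # the output becomes part of the next input
        if token == END_OF_TEXT:
            break
    return tokens

SCRIPT = [7, 8, 9, END_OF_TEXT]

def fake_model(tokens):
    # Hypothetical stand-in for the model: emits the scripted ids in order.
    step = len(tokens) - 1
    return SCRIPT[step] if step < len(SCRIPT) else END_OF_TEXT

print(generate(fake_model, [END_OF_TEXT]))  # [50256, 7, 8, 9, 50256]
```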

How GPT-3 Works

A dataset of 300 billion tokens of text is used to generate training examples for the model. A single sentence already yields several examples: each prefix of the sentence becomes an input, and the word that follows it becomes the prediction target. Sliding a window across all the text produces a very large number of examples.
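A sketch of turning text into (context, next token) pairs by sliding a window. Whitespace-split words stand in for real tokens (GPT-3 uses byte-pair-encoded tokens and a much longer window), and the sentence and `window=3` are illustrative assumptions.

```python
def training_examples(tokens, window):
    """Slide over the text; each example pairs up to `window` tokens with the next token."""
    examples = []
    for end in range(1, len(tokens)):
        context = tokens[max(0, end - window):end]
        examples.append((context, tokens[end]))
    return examples

sentence = "a robot must obey the orders".split()
for context, target in training_examples(sentence, window=3):
    print(context, "->", target)
# ['a'] -> robot
# ['a', 'robot'] -> must
# ['a', 'robot', 'must'] -> obey
# ['robot', 'must', 'obey'] -> the
# ['must', 'obey', 'the'] -> orders
```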

At first, the model's prediction will be wrong. We calculate the error in its prediction and update the model so that next time it makes a better prediction. Repeat millions of times.
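A toy version of "calculate the error, then update": cross-entropy (the negative log-probability of the correct next token) measures the error, and a gradient-descent step nudges the scores toward the target. In a real model the gradient flows back into all the weights through backpropagation; here, as a simplifying assumption, the three scores themselves are the only parameters, and the learning rate is arbitrary.

```python
import math

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def cross_entropy(logits, target):
    """Error of a prediction: negative log-probability assigned to the correct token."""
    return -math.log(softmax(logits)[target])

def sgd_step(logits, target, lr):
    """One gradient-descent update; d(loss)/d(logit_i) = p_i - (1 if i == target else 0)."""
    p = softmax(logits)
    return [z - lr * (p_i - (1.0 if i == target else 0.0))
            for i, (z, p_i) in enumerate(zip(logits, p))]

logits = [2.0, 1.0, 0.1]  # hypothetical: the model currently favours token 0
target = 2                # but the correct next token is token 2
losses = []
for _ in range(100):      # "repeat" many times
    losses.append(cross_entropy(logits, target))
    logits = sgd_step(logits, target, lr=0.5)
```

After the loop the loss has dropped and token 2 has the highest score.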

In an "X-ray" of GPT-3 processing an input and producing a response ("Okay human"), every token flows through the entire layer stack. While the input is being processed, we ignore the outputs at those positions. Once the input is done, we start using the output, and we feed every generated word back into the model.
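A sketch of that behaviour, assuming a hypothetical `forward` function that returns one prediction per input position. Predictions at the prompt positions are computed but discarded; only the last one is used, and each generated word is appended to the input for the next pass. The lookup-table "model", which looks only at the current word, is purely illustrative.

```python
from typing import Callable, List

def respond(forward: Callable[[List[str]], List[str]], prompt: List[str], n_new: int) -> List[str]:
    """Run the prompt through the model, ignore outputs at prompt positions, then generate."""
    tokens = list(prompt)
    generated = []
    for _ in range(n_new):
        predictions = forward(tokens)  # one prediction per input position
        next_token = predictions[-1]   # only the last position's output is used
        generated.append(next_token)
        tokens.append(next_token)      # feed the word back into the model
    return generated

# Hypothetical lookup-table "model": predicts the word that follows each position.
TABLE = {"robot": "Okay", "Okay": "human", "human": "<end>"}

def toy_forward(tokens):
    return [TABLE.get(t, "?") for t in tokens]

print(respond(toy_forward, ["Hello", "robot"], n_new=2))  # ['Okay', 'human']
```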
